CapLoader 1.8 Released

We are happy to announce the release of CapLoader 1.8 today!

CapLoader is primarily used to filter, slice and dice large PCAP datasets into smaller ones. This new version contains several new features that improve this filtering functionality even further. To start with, the “Keyword Filter” can now use regular expressions to filter the rows in the Flows, Services or Hosts tabs. This enables matching expressions like these:

- `amazon|akamai|cdn`: Show only rows containing any of the strings “amazon”, “akamai” or “cdn”.

- `microsoft\.com\b|windowsupdate\.com\b`: Show only servers with domain names ending in “microsoft.com” or “windowsupdate.com”.

- `^SMB2?$`: Show only SMB and SMB2 flows.

- `\d{1,3}\.\d{1,3}\.\d{1,3}\.255$`: Show only IPv4 addresses ending with “.255”.
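The four example expressions above can be tried outside CapLoader with a short Python sketch that keeps only the rows where a pattern matches anywhere in the row. Note that CapLoader uses Microsoft's .NET regex engine, whereas this sketch uses Python's `re` module; the two behave the same for these particular patterns but not for all syntax. The sample rows and the `keyword_filter` helper are hypothetical illustrations, not CapLoader code.

```python
import re

# Example filter expressions from the CapLoader 1.8 announcement.
FILTERS = {
    "cdn": r"amazon|akamai|cdn",
    "microsoft": r"microsoft\.com\b|windowsupdate\.com\b",
    "smb": r"^SMB2?$",
    "broadcast": r"\d{1,3}\.\d{1,3}\.\d{1,3}\.255$",
}

# Hypothetical cell values: hostnames, protocol names and IP addresses.
ROWS = [
    "images-na.ssl-images-amazon.com",
    "a1.akamaihd.net",
    "download.windowsupdate.com",
    "login.microsoft.com",
    "microsoft.community.example",
    "SMB",
    "SMB2",
    "SMB3",
    "192.168.1.255",
    "192.168.1.25",
]


def keyword_filter(pattern, rows):
    """Keep only the rows where the regular expression matches anywhere."""
    regex = re.compile(pattern)
    return [row for row in rows if regex.search(row)]


if __name__ == "__main__":
    for name, pattern in FILTERS.items():
        print(name, keyword_filter(pattern, ROWS))
```

The `\b` word boundary is what stops “microsoft.community.example” from matching the second expression. It does not strictly anchor the match to the end of the name, though: a name like “microsoft.com.example.net” would still match, because a dot also counts as a word boundary.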

For a reference on the full regular expression syntax available in CapLoader, please see Microsoft’s regex “Quick Reference”.

One popular workflow supported by CapLoader is to divide all flows (or hosts) into two separate datasets, for example one “normal” and one “malicious” set. The user can move rows between these two sets; only one set is visible at a time, while the rows in the other set are hidden. To switch which dataset is visible and which is hidden, the user needs to click the

Readers with a keen eye might also notice that the purple bar charts are now accompanied by a number indicating how many rows are visible after each filter is applied.

The available filters are:

NetFlow + DNS = Great Success!

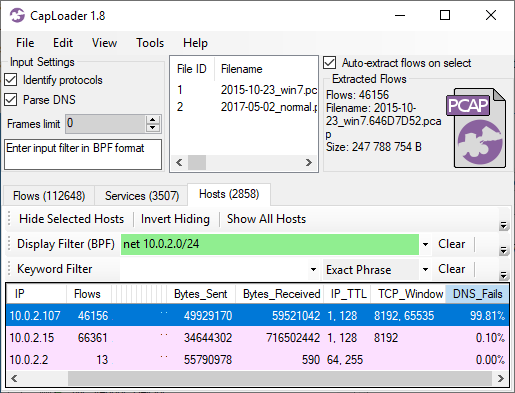

CapLoader’s main view presents the contents of the loaded PCAP files as a list of netflow records. Since the full PCAP is available, CapLoader also parses the DNS packets in the capture files in order to enrich the netflow view with hostnames. Recently PaC shared a great idea with us: why not show how many failed DNS lookups each client makes? This would enable generic detection of DGA botnets without relying on blacklists. I’m happy to announce that this great idea made it directly into this new release! The rightmost column in CapLoader’s Hosts tab, called “DNS_Fails”, shows the percentage of a client’s DNS requests that resulted in an NXDOMAIN or SERVFAIL response.

Two packet capture files are loaded into CapLoader in the screenshot above: one PCAP file from a PC infected with the Shifu malware and one PCAP file with “normal traffic” (thanks @StratosphereIPS for sharing these capture files). As you can see, one of the clients (10.0.2.107) has a really high DNS failure ratio (99.81%). Unsurprisingly, this is also the host that was infected with Shifu, which uses a domain generation algorithm (DGA) to locate its C2 servers.
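A failure ratio like DNS_Fails can be approximated from parsed DNS responses as the share of each client's responses whose RCODE is 2 (SERVFAIL) or 3 (NXDOMAIN). The sketch below assumes the responses have already been extracted from the PCAP into hypothetical `(client_ip, rcode)` tuples. CapLoader's exact counting rules (for example how unanswered queries or retransmissions are treated) are not described here, so this is only an approximation of the idea.

```python
from collections import Counter

# DNS RCODE values (RFC 1035): 2 = server failure, 3 = name error (NXDOMAIN).
SERVFAIL = 2
NXDOMAIN = 3
FAIL_CODES = {SERVFAIL, NXDOMAIN}


def dns_fail_ratio(responses):
    """Return {client_ip: percent of DNS responses that were SERVFAIL or NXDOMAIN}.

    `responses` is an iterable of (client_ip, rcode) tuples, one per DNS
    response sent to that client (a hypothetical pre-parsed format).
    """
    total = Counter()
    failed = Counter()
    for client, rcode in responses:
        total[client] += 1
        if rcode in FAIL_CODES:
            failed[client] += 1
    return {client: 100.0 * failed[client] / total[client] for client in total}


if __name__ == "__main__":
    # Hypothetical sample: a DGA-like client versus a normal one.
    sample = [
        ("192.0.2.10", NXDOMAIN),
        ("192.0.2.10", NXDOMAIN),
        ("192.0.2.10", NXDOMAIN),
        ("192.0.2.10", 0),  # NOERROR
        ("192.0.2.20", 0),
        ("192.0.2.20", 0),
    ]
    for client, pct in dns_fail_ratio(sample).items():
        print(f"{client}: {pct:.2f}%")  # 192.0.2.10: 75.00%, 192.0.2.20: 0.00%
```

A client running a DGA typically tries many generated domains that were never registered, so most of its lookups end in NXDOMAIN and its ratio approaches 100%, as with the Shifu-infected host above.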

Apart from parsing A and CNAME records from DNS responses, CapLoader now also parses AAAA records (IPv6 addresses). This enables CapLoader to map public domain names to hosts with IPv6 addresses.
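Mapping an IPv6 address to a domain name works much like the A-record case: the 16-byte RDATA of an AAAA record (RR type 28) is the IPv6 address, and CNAME records link the queried alias to the name that owns the address. The sketch below assumes the answer records have already been decoded into hypothetical `(owner_name, rr_type, rdata)` tuples; the input format and the `ip_to_hostnames` function are illustrations, not CapLoader's internal design.

```python
import ipaddress

# DNS resource record type codes (RFC 1035 and RFC 3596).
TYPE_A = 1
TYPE_CNAME = 5
TYPE_AAAA = 28


def ip_to_hostnames(answers):
    """Build {ip_address: set of hostnames} from decoded DNS answer records.

    `answers` holds (owner_name, rr_type, rdata) tuples (a hypothetical
    pre-parsed format): rdata is the raw RDATA bytes for A and AAAA records
    and the target name as a str for CNAME records.
    """
    # Reverse CNAME map: canonical name -> aliases that point at it.
    aliases = {}
    for name, rr_type, rdata in answers:
        if rr_type == TYPE_CNAME:
            aliases.setdefault(rdata, set()).add(name)

    mapping = {}
    for name, rr_type, rdata in answers:
        if rr_type == TYPE_A and len(rdata) == 4:
            ip = ipaddress.IPv4Address(rdata)  # 4-byte RDATA
        elif rr_type == TYPE_AAAA and len(rdata) == 16:
            ip = ipaddress.IPv6Address(rdata)  # 16-byte RDATA
        else:
            continue
        names = mapping.setdefault(ip, set())
        # Walk the CNAME chain backwards so every alias maps to this address.
        pending = [name]
        while pending:
            current = pending.pop()
            if current not in names:
                names.add(current)
                pending.extend(aliases.get(current, ()))
    return mapping


if __name__ == "__main__":
    answers = [
        ("www.example.com", TYPE_CNAME, "edge.example.net"),
        ("edge.example.net", TYPE_AAAA, ipaddress.IPv6Address("2001:db8::1").packed),
        ("edge.example.net", TYPE_A, bytes([192, 0, 2, 1])),
    ]
    for ip, names in ip_to_hostnames(answers).items():
        print(ip, sorted(names))
```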

Additional Updates

The new CapLoader release also comes with several other new features and updates, such as:

- Added urlscan.io service for domain and IP lookups (right-click a flow or host to bring up the lookup menu).

- Flow ID coloring based on the 5-tuple, and clearer colors in the timeline Gantt chart.

- Extended default flow-timeout from 10 minutes to 2 hours for TCP flows.

- Changed flow-timeout for non-TCP flows to 60 seconds.

- Upgraded to .NET Framework 4.7.2.
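The new flow-timeout defaults decide when packets sharing the same 5-tuple are split into separate flows. The sketch below assumes the timeout is an idle timeout (time since the flow's previous packet) rather than a maximum flow duration, and treats both directions of a conversation as one flow; the `Packet` type and the `assign_flows` function are hypothetical illustrations, not CapLoader's implementation.

```python
from dataclasses import dataclass

# Default timeouts (seconds) listed in the CapLoader 1.8 release notes.
TCP_TIMEOUT = 2 * 60 * 60  # 2 hours for TCP flows
OTHER_TIMEOUT = 60         # 60 seconds for non-TCP flows
TCP = 6                    # IP protocol number for TCP


@dataclass(frozen=True)
class Packet:
    time: float  # capture timestamp in seconds
    src: str
    sport: int
    dst: str
    dport: int
    proto: int   # IP protocol number (6 = TCP, 17 = UDP, ...)


def flow_key(p):
    """Direction-independent 5-tuple, so replies land in the same flow."""
    a, b = (p.src, p.sport), (p.dst, p.dport)
    return (min(a, b), max(a, b), p.proto)


def assign_flows(packets):
    """Return one flow ID per packet; packets must be sorted by timestamp.

    A new flow is started when a 5-tuple has been idle for longer than
    the protocol's timeout (assumed idle-timeout semantics).
    """
    last_seen = {}  # 5-tuple -> (flow_id, time of previous packet)
    next_id = 0
    ids = []
    for p in packets:
        key = flow_key(p)
        timeout = TCP_TIMEOUT if p.proto == TCP else OTHER_TIMEOUT
        entry = last_seen.get(key)
        if entry is None or p.time - entry[1] > timeout:
            flow_id = next_id
            next_id += 1
        else:
            flow_id = entry[0]
        last_seen[key] = (flow_id, p.time)
        ids.append(flow_id)
    return ids
```

With these defaults, a DNS reply arriving 30 seconds after its query stays in the same UDP flow, whereas a long-lived TCP session with an hour of silence between packets is no longer split in two.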

Updating to the Latest Release

Users who have previously purchased a license for CapLoader can download a free update to version 1.8 from our customer portal. All others can download a free 30-day trial from the CapLoader product page (no registration required).

Credits

We’d like to thank Mikael Harmark, Mandy van Oosterhout and Ulf Holmström for reporting bugs that have been fixed in this release. We’d also like to thank PaC for the DNS failure rate feature request mentioned in this blog post.

Posted by Erik Hjelmvik on Tuesday, 28 May 2019 10:45:00 (UTC/GMT)

Tags: #CapLoader #NetFlow #regex #DNS #DGA #Stratosphere