PolarProxy 0.9 Released

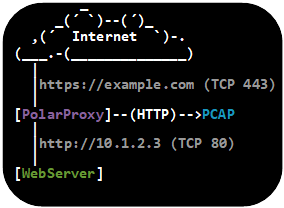

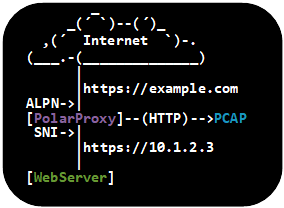

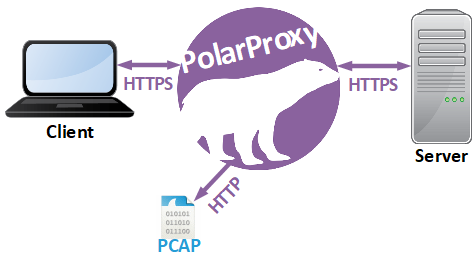

PolarProxy was previously designed to only run as a transparent TLS proxy. But due to popular demand we’ve now extended PolarProxy to also include a SOCKS proxy and a HTTP CONNECT proxy. PolarProxy automatically decrypts all proxied SSL and TLS traffic, regardless if the remote server is running on TCP 443 or some other port, as long as the traffic passes through PolarProxy. As from now we also release a Windows build of PolarProxy, alongside the Linux x64, ARM and ARM64 builds.

SOCKS Proxy

Use the command line argument “--socks [port]” to start PolarProxy’s SOCKS proxy server. This SOCKS proxy supports multiple versions of the SOCKS protocol, including SOCKS 4, SOCKS 4a, SOCKS 5 and SOCKS 5h.

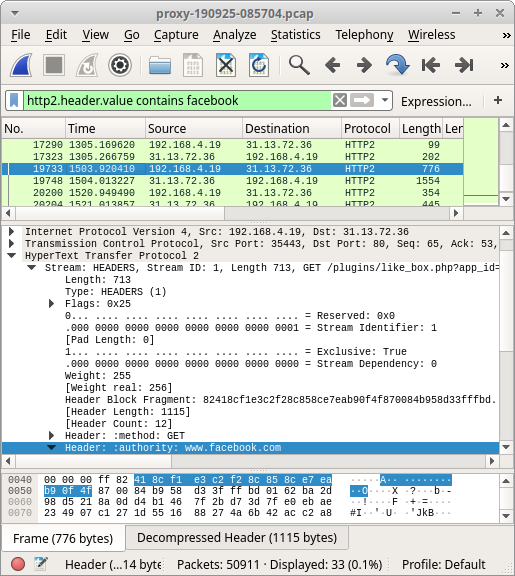

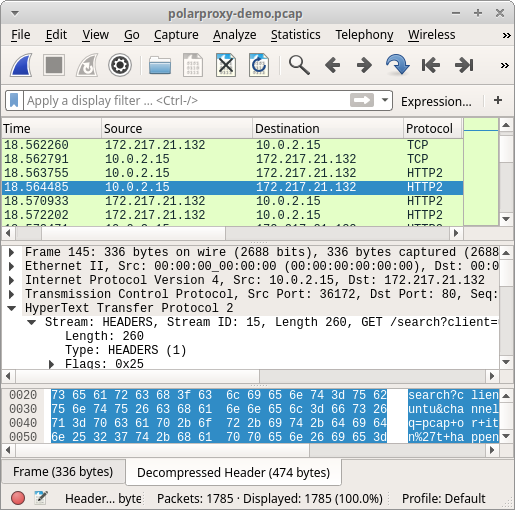

As an example, the command below starts a SOCKS server on TCP port 1080 and passes a copy of the decrypted TLS traffic as a PCAP stream to tshark.

You can then use curl to run some HTTPS traffic through the SOCKS proxy:

After doing this you should see the decrypted HTTP/2 traffic in tshark’s output.

HTTP CONNECT Proxy

We’ve also added a HTTP proxy to PolarProxy 0.9, but it only supports the CONNECT request method. This means that normal unencrypted HTTP requests, like GET or POST requests, will be rejected by PolarProxy. Most web traffic is TLS encrypted nowadays anyway, so we don't consider this limitation to be a big issue.

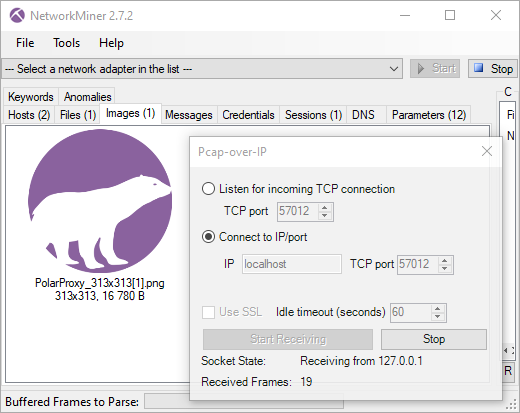

The HTTP CONNECT proxy service is activated with the “--httpconnect” argument. Decrypted TLS traffic from PolarProxy’s HTTP CONNECT proxy can be forwarded to tshark just like in the SOCKS example, but the traffic from these proxies can also be accessed through PCAP-over-IP like this:

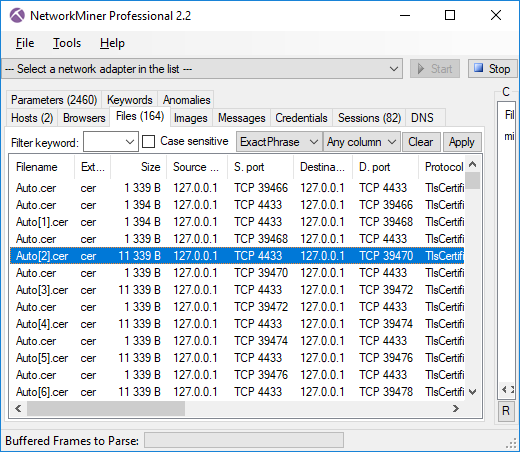

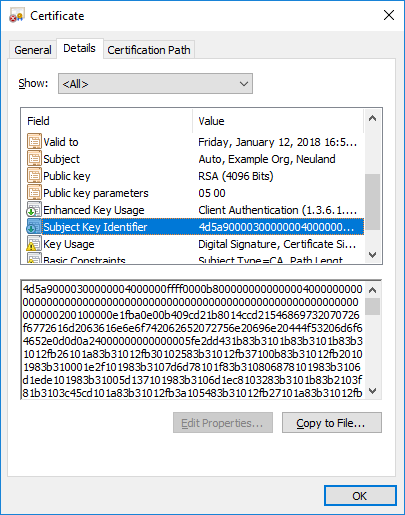

You can then connect to PolarProxy’s PCAP-over-IP service with NetworkMiner by clicking File, Receive PCAP over IP, select “Connect to IP/port”, enter “localhost” and click the “Start Receiving” button. You’ll now be able to see a real-time feed of all the traffic that PolarProxy decrypts. As an example, let’s download the PolarProxy logo over HTTPS to see if NetworkMiner can extract it from PolarProxy’s decrypted PCAP-over-IP stream:

The PolarProxy logo immediately shows up in NetworkMiner’s images tab:

Port-Independent TLS Protocol Detection

When PolarProxy is running as a transparent TLS proxy all incoming traffic can be expected to be TLS. But that’s not the case when, for example, PolarProxy is running as a SOCKS proxy. We have therefore added port-independent TLS protocol detection for proxied traffic, so that TLS traffic can be detected and decrypted even when it runs on other ports than the standard 443, 465, 853, 990, 993, 995 and 5061 ones.

There is one crucial limitation to the automatic SSL/TLS protocol detection though, it doesn’t support explicit TLS traffic that relies on opportunistic encryption features like STARTTLS, which bootstraps TLS into an already established application layer session.

Allow Non-TLS Traffic

SOCKS and HTTP CONNECT proxies can both be used to transport other protocols than TLS. PolarProxy blocks all non-TLS traffic by default, but this setting can be overridden with the “--nontls allow” argument to allow any traffic to be proxied. The allow non-TLS override has no effect on PolarProxy’s transparent proxy though, because it will need to see a valid SNI field in order to know whereto the traffic should be forwarded.

Windows Build

There wasn’t much need for a Windows build of PolarProxy prior to the release of version 0.9, because the Windows firewall can’t be configured to redirect outgoing port 443 traffic to a local service. However, now that PolarProxy also includes SOCKS and HTTP CONNECT services, the situation is completely different. There are many ways to configure a Windows PC, as well as web browsers and other applications, to use a local proxy server.

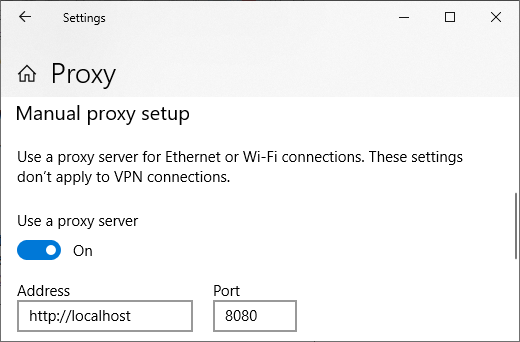

You can use the Proxy settings window in Windows 10 and 11 to enable a local HTTP proxy like this:

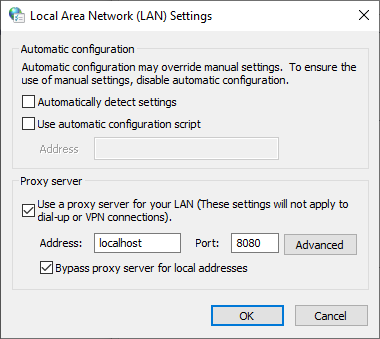

Another option is to run “inetcpl.cpl” (Internet Options), open the “Connections” tab and click the “LAN settings” button to configure an HTTP proxy.

You can, of course, also configure your browser to use a local SOCKS or HTTP proxy in Windows, just as you’d do on any other operating system.

But don’t forget to configure your OS and/or browser to trust your PolarProxy instance’s root CA certificate first, as explained in the “Trusting the PolarProxy root CA” section of our PolarProxy documentation.

The Windows version of PolarProxy is a .NET framework-dependent application, which requires the .NET 6 runtime to be installed. The PolarProxy releases for other platforms (Linux x64, ARM and ARM64) are all self-contained applications, which are published with the .NET runtime built-in.

Visit our PolarProxy page to download and install PolarProxy.

Posted by Erik Hjelmvik on Thursday, 13 January 2022 10:15:00 (UTC/GMT)

Tags: #PolarProxy #proxy #SOCKS #SOCKS5 #TLS #SSL #decrypt #Windows #PCAP-over-IP #pcapoverip

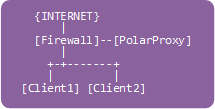

Image: Kibana showing HTTP traffic info from decrypted HTTPS sessions

Image: Kibana showing HTTP traffic info from decrypted HTTPS sessions

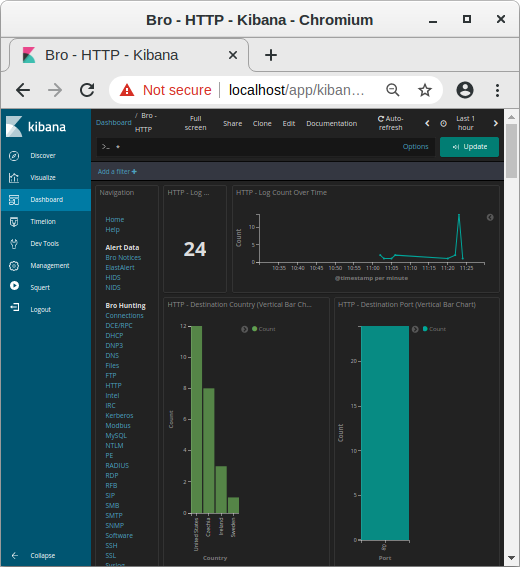

Image: MIME types in Kibana

Image: MIME types in Kibana

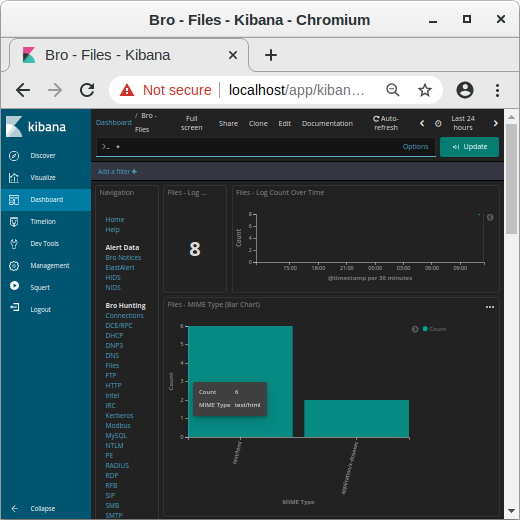

Image: NIDS alerts from payload in decrypted traffic shown in Kibana

Image: NIDS alerts from payload in decrypted traffic shown in Kibana

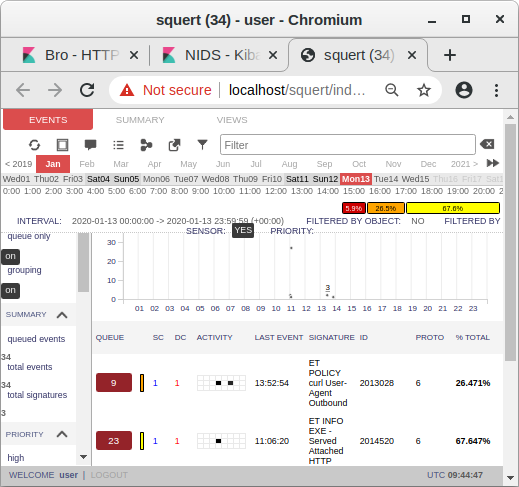

Image: Snort alerts from decrypted traffic shown in Squert

Image: Snort alerts from decrypted traffic shown in Squert

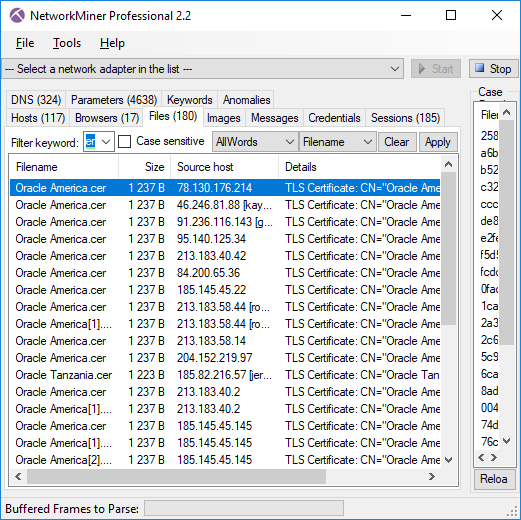

Image: Files extracted from ADT’s PCAP files that mach “Oracle” and “cer”.

Image: Files extracted from ADT’s PCAP files that mach “Oracle” and “cer”.

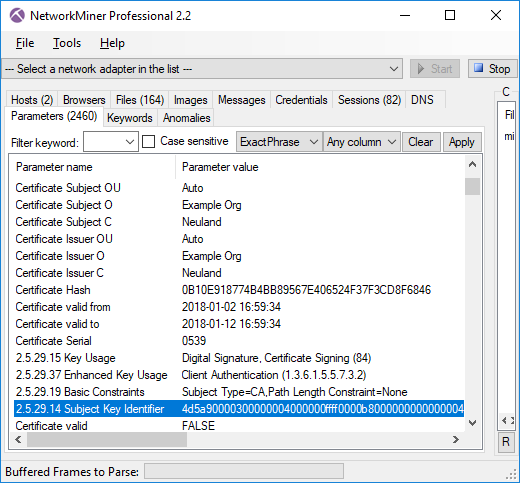

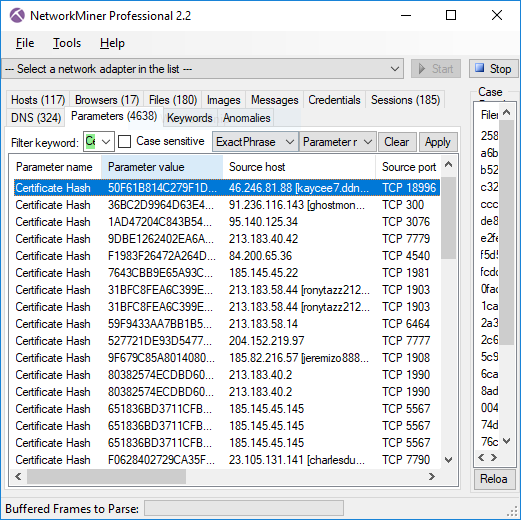

Image: Certificate Hash values found in Adwind RAT’s SSL traffic

Image: Certificate Hash values found in Adwind RAT’s SSL traffic

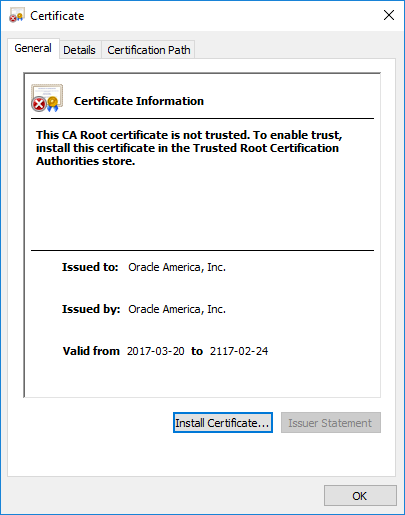

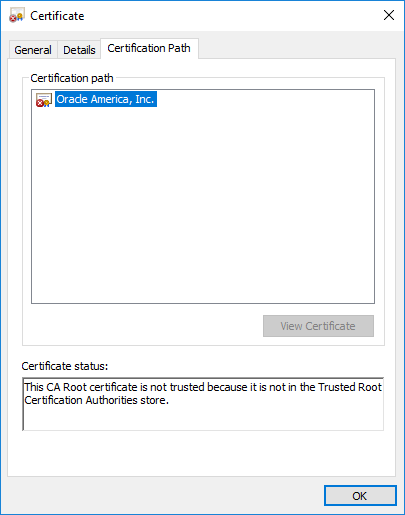

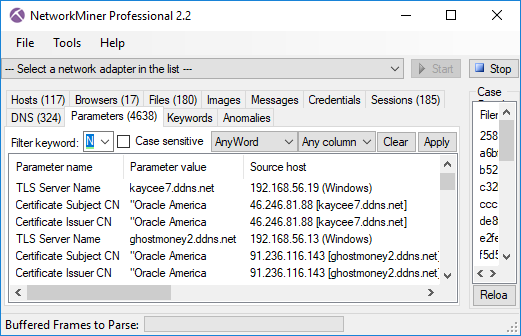

Image: TLS Server Name (aka SNI) and Subject CN values don’t match for AdwindRAT

Image: TLS Server Name (aka SNI) and Subject CN values don’t match for AdwindRAT

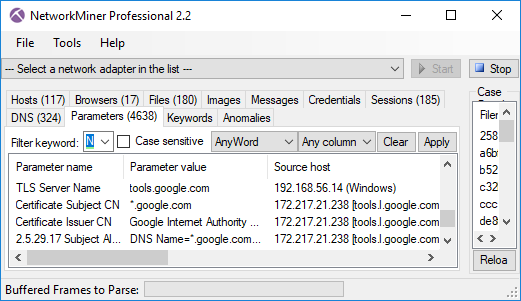

Image: TLS Server Name (SNI) with matching Subject CN from Google.

Image: TLS Server Name (SNI) with matching Subject CN from Google.