CapLoader 2.0 Released

I am thrilled to announce the release of CapLoader 2.0 today!

This major update includes a lot of new features, such as a QUIC parser, alerts for threat hunting and a feature that allows users to define their own protocol detections based on example network traffic.

User Defined Protocols

CapLoader's Port Independent Protocol Identification feature can currently detect over 250 different protocols without having to rely on port numbers. This feature can be used to alert on rogue services like SSH, FTP, VPN and web servers that have been set up on non-standard ports to go unnoticed. But what if you want to detect traffic that isn’t using any of the 250 protocols that CapLoader identifies? CapLoader 2.0 includes a fantastic solution to this problem! Simply right-click a flow containing the traffic you want to identify and select “Define protocol from flow”. This creates a custom local protocol detection model based on the selected traffic.

CapLoader’s protocol identification feature may seem like magic, but it actually relies on several different statistical measurements of the traffic in order to build a model of how the protocol behaves. It's possible to define a protocol model from just a single flow, but doing so may lead to poor detection results, which is why we recommend defining protocols from at least 10 different flows. You can do this either by selecting multiple flows or services before clicking “Define protocol from” or by adding additional flows or services to a protocol model at a later point by clicking “Add flow to protocol definition”.
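The measurements and model format that CapLoader actually uses are not described here, so the following is only a toy sketch of the general idea. It assumes that each flow can be reduced to a few numeric features; the two features and all function names are hypothetical. It also hints at why a single flow makes a weak model: with one example there is no spread to learn from, so the tolerance has to be guessed (the `min_spread` fallback below).

```python
from statistics import mean, pstdev

def flow_features(payloads):
    """Reduce a flow (non-empty list of payload byte strings) to two toy features."""
    sizes = [len(p) for p in payloads]
    data = b"".join(payloads)
    printable = sum(1 for b in data if 32 <= b < 127)
    ratio = printable / len(data) if data else 0.0
    return (mean(sizes), ratio)  # (mean payload length, printable byte ratio)

def build_model(flows, min_spread=1e-3):
    """Per-feature mean and standard deviation over the example flows."""
    vectors = [flow_features(f) for f in flows]
    columns = list(zip(*vectors))
    # With a single example flow pstdev() is 0, so min_spread is all we have.
    return [(mean(c), max(pstdev(c), min_spread)) for c in columns]

def matches(model, payloads, max_z=3.0):
    """True if every feature lies within max_z standard deviations of its mean."""
    feats = flow_features(payloads)
    return all(abs(x - mu) / sd <= max_z for x, (mu, sd) in zip(feats, model))
```

A model built from ten slightly different example flows tolerates natural variation in new traffic, whereas a model built from one flow only matches near-identical traffic.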

More Malware Protocols Detected

There are several malware C2 protocols among CapLoader’s built-in models for protocol identification. The 2.0 release has been extended to detect even more malware protocols out of the box, such as Aurotun Stealer, PrivateLoader, PureLogs, RedTail, ResolverRAT, SpyMAX, SpyNote and ValleyRAT.

These protocols can now be detected using CapLoader regardless of which IP address or port number the server runs on.

QUIC Parser

CapLoader now parses the QUIC protocol, which typically runs on UDP port 443 and transports TLS encrypted HTTP/3 traffic. CapLoader doesn’t decrypt the TLS encrypted HTTP/3 traffic, though. It only parses the initial QUIC packets containing the client’s TLS handshake in order to extract the target domain name from the SNI extension and to generate JA3 hashes and JA4 fingerprints of that handshake.

Image: QUIC traffic from Active Countermeasures

- Merlin C2 JA3: 203c2306834e5bf5ace01fb74ad1badf

- Merlin C2 JA4: q13i0311h3_55b375c5d22e_c183556c78e2

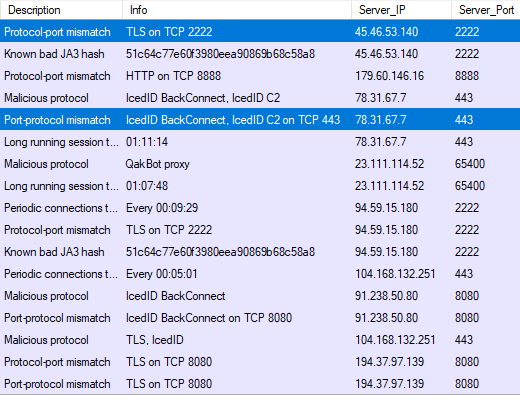
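For readers unfamiliar with JA3, here is a minimal sketch of how such a hash is formed according to the public JA3 description: five ClientHello fields are written as decimal values, joined with dashes and commas, and the resulting string is hashed with MD5. This is not CapLoader's code. It assumes the fields have already been parsed from the ClientHello, and the function names are hypothetical. JA4 uses a different, more elaborate format and is not covered here.

```python
import hashlib

def is_grease(value):
    """GREASE values (RFC 8701) look like 0x0a0a, 0x1a1a, ... 0xfafa."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)

def ja3_string(version, ciphers, extensions, groups, point_formats):
    """Build 'Version,Ciphers,Extensions,Groups,PointFormats' in decimal."""
    parts = [str(version)]
    for field in (ciphers, extensions, groups, point_formats):
        # JA3 ignores GREASE values so that they don't randomize the hash.
        parts.append("-".join(str(v) for v in field if not is_grease(v)))
    return ",".join(parts)

def ja3_hash(version, ciphers, extensions, groups, point_formats):
    """MD5 hex digest of the JA3 string."""
    text = ja3_string(version, ciphers, extensions, groups, point_formats)
    return hashlib.md5(text.encode("ascii")).hexdigest()
```

Calling `ja3_string(771, [0x0a0a, 4865, 4866], [0, 11, 10], [29, 23], [0])` with these made-up values yields `771,4865-4866,0-11-10,29-23,0`, which `ja3_hash` then turns into a 32 character hex string like the Merlin C2 JA3 value above.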

More Alerts

There’s a fantastic service called ThreatFox, to which security researchers, incident responders and others share indicators of compromise (IOC). Many of the shared IOCs are domain names and IP addresses used by malware for payload delivery, command-and-control (C2) or data exfiltration. Various IOC lists can be downloaded from ThreatFox, so that they can be used by a DNS firewall or a TLS firewall to block malware traffic. But the IOCs can also be used for alerting and threat hunting. CapLoader downloads two IOC lists from ThreatFox when the tool is started (the data is then cached for 24 hours, so that no new download is needed until the next day). Analyzed network traffic is then matched against these downloaded offline databases to provide alerts whenever there is traffic to a domain name or IP address that has been reported to be associated with malware.
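The caching and matching logic described above can be sketched roughly as follows. This is an illustration under stated assumptions, not CapLoader's implementation: the IOC lists are assumed to be already loaded into Python sets, flows are assumed to be dictionaries with hypothetical `domain` and `server_ip` keys, and no ThreatFox URL or file format is implied.

```python
import os
import time

CACHE_MAX_AGE = 24 * 60 * 60  # seconds; the 24-hour window mentioned above

def cache_is_fresh(path, now=None, max_age=CACHE_MAX_AGE):
    """True if the cached IOC file exists and is younger than max_age."""
    if not os.path.exists(path):
        return False
    now = time.time() if now is None else now
    return now - os.path.getmtime(path) < max_age

def match_iocs(flows, bad_domains, bad_ips):
    """Yield (flow, reason) for flows whose domain or server IP is a known IOC."""
    for flow in flows:
        domain = (flow.get("domain") or "").lower().rstrip(".")
        if domain in bad_domains:
            yield flow, "domain listed as IOC: " + domain
        elif flow.get("server_ip") in bad_ips:
            yield flow, "IP listed as IOC: " + flow["server_ip"]
```

Because the lookups are plain set membership tests against a local copy of the lists, analyzed traffic can be matched without contacting any online service.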

Image: Alerts for traffic to Lumma Stealer and Remcos servers listed on ThreatFox

We’ve also added two additional alert types in this release, one for anomalous TLS handshakes, and one for connections to suspicious domains. Both these alerts are designed primarily for threat hunting, since there’s a considerable risk that they will alert on legitimate traffic. The anomalous TLS handshake alert tries to detect odd TLS connections that are not originating from the user’s web browser or the operating system. The alert is triggered when such odd connections are made to domain names that are not well-known. This alert logic is designed to generically detect any TLS encrypted malware traffic, where the malware is using a custom TLS library instead of relying on operating system API calls for establishing encrypted connections. But this logic might also lead to false positive alerts, for example when legitimate applications use custom TLS libraries to perform tasks like checking a license or looking for software updates. The suspicious domain alert looks for connections to domain names like devtunnels.ms, ngrok.io and mocky.io, which are often used by APTs as well as crime groups.
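A rough sketch of both alert types follows. The three domain names come from the text above. Everything else is an assumption made for illustration: the function names, the idea of recognizing browser and operating system handshakes through a set of common fingerprints, and the set of well-known domains are hypothetical, since the actual criteria are not spelled out here.

```python
SUSPICIOUS_SUFFIXES = ("devtunnels.ms", "ngrok.io", "mocky.io")

def normalize(name):
    """Lower-case a domain name and drop any trailing dot."""
    return name.lower().rstrip(".")

def is_suspicious_domain(name, suffixes=SUSPICIOUS_SUFFIXES):
    """True if name equals a listed domain or is a subdomain of one."""
    name = normalize(name)
    return any(name == s or name.endswith("." + s) for s in suffixes)

def is_anomalous_tls(fingerprint, domain, common_fingerprints, well_known_domains):
    """Assumed logic: an uncommon client fingerprint talking to an obscure domain."""
    odd_client = fingerprint not in common_fingerprints
    obscure_domain = normalize(domain) not in well_known_domains
    return odd_client and obscure_domain
```

Matching on a dot-separated suffix rather than a plain substring avoids alerting on unrelated names such as `notngrok.io`.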

Metrics for VPN Detection

CapLoader 2.0 displays the TCP MSS values on the Hosts tab. This value can help with determining if a host is behind a VPN. An MSS value below 1400 indicates that the host’s traffic might pass through some form of overlay network, such as a tunnel or VPN. Other indicators that can help identify VPN and tunnelled traffic are IP TTL and latency, which CapLoader also displays on the Hosts tab.
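The arithmetic behind the MSS indicator is simple: the MSS is the path MTU minus the IP and TCP headers, so any encapsulation that shrinks the MTU also shrinks the MSS. The sketch below assumes headers without options, and the function names are hypothetical. The 1400 threshold is the one mentioned above, while the MTU of 1420 in the usage note is just an illustrative value for a tunnel interface.

```python
IPV4_HEADER = 20   # bytes, without options
IPV6_HEADER = 40   # bytes, fixed header only
TCP_HEADER = 20    # bytes, without options
MSS_THRESHOLD = 1400

def mss_for_mtu(mtu, ipv6=False):
    """MSS a host would normally advertise for a given interface MTU."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mtu - ip_header - TCP_HEADER

def might_be_tunnelled(mss, threshold=MSS_THRESHOLD):
    """True if the MSS is low enough to hint at a tunnel or VPN."""
    return mss < threshold
```

A host on plain Ethernet (MTU 1500) advertises `mss_for_mtu(1500)`, which is 1460 and stays above the threshold. A host behind a tunnel with an MTU of 1420 advertises 1380, which falls below it.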

Improved User Experience

A lot of effort has been put into improving the user interface and general user experience for this new CapLoader release. One very important user experience factor is the responsiveness of the user interface, which has been significantly improved. Actions like sorting and filtering flows, services or alerts in CapLoader now complete around 10 times faster than before, which is very noticeable when working with multi-gigabyte capture files. Another improvement related to working with large capture files is that CapLoader now uses significantly less memory.

The transcript window in CapLoader has also received a touch-up. There is now, for example, a search box that allows you to quickly find a particular keyword in a TCP or UDP transcript (thanks to Allan Christensen at SektorCERT for the idea). Actions in the transcript window, such as changing encoding or flipping up/down between flows, now also complete much faster than before.

CapLoader automatically saves the packets from selected flows or services to a pcap file in the %TEMP% directory every time the selection changes. This pcap file can be accessed from the “PCAP” icon in the top-right of the user interface. Simply drag-and-drop from CapLoader’s PCAP icon to Wireshark or NetworkMiner to open the filtered traffic. Several users have requested the ability to also perform this drag-and-drop operation directly from the selected rows. I’m happy to say that this is now possible, but you have to perform the drag-and-drop with the middle mouse button (such as the scroll wheel). Users without a middle mouse button can drag-and-drop selected rows by holding down the Ctrl key while drag-and-dropping with the right mouse button instead.

Free Trial vs Commercial Version

Many of CapLoader’s features, such as port-independent protocol identification, are only available in the commercial version of CapLoader. But the free trial version of CapLoader does include new features like the QUIC parser, alerts for suspicious domains and alerts for domain names that are listed on ThreatFox.

Image: Alerts on malicious and suspicious traffic in Trial version of CapLoader

Updating to CapLoader 2.0

Users who have already purchased a license for CapLoader can download a free update to version 2.0 from our customer portal or by clicking “Check for Updates” in CapLoader’s Help menu.

Posted by Erik Hjelmvik on Monday, 02 June 2025 13:47:00 (UTC/GMT)

Tags: #CapLoader #QUIC #Threat Hunting #ThreatFox

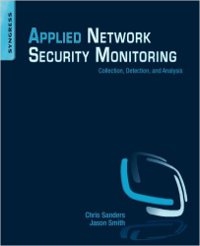

Image:

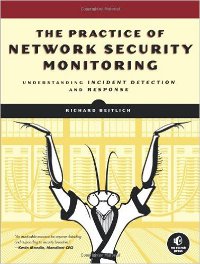

Image: