Carving Packets from Memory

Someone who says "We're gonna pull the packet captures out of the router" probably has no clue how to capture network traffic. In the Lindell case, statements like these were the result of an elaborate hoax.

Nevertheless, such a statement isn't necessarily nonsense if it comes from someone who knows how to dump the physical memory from the router. There are actually more packets available in the RAM of a router, or computer for that matter, than you might think.

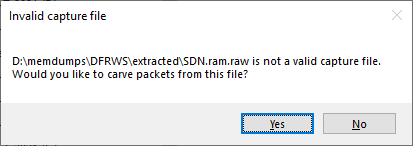

The Forensic Challenge from DFRWS 2016 contains a memory dump from an SDN switch. If you drag-and-drop SDN.ram.raw from that challenge onto CapLoader, you'll be asked if you wanna carve packets from the memory dump.

This packet carving feature is also available in the free trial version of CapLoader.

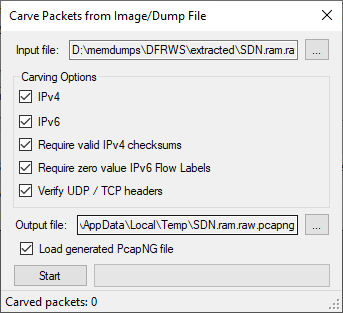

Clicking "Yes" in the dialogue brings up a configuration window. The default settings are okay in most cases.

After pressing "Start" CapLoader will start identifying packets in the memory dump from the SDN switch. The packets will be saved to a Pcap-NG file located in the %TEMP% directory, unless you specified a different output location in the config window.

You can download a copy of the Pcap-NG file that I generated with CapLoader 1.9.2 here:

https://media.netresec.com/pcap/SDN.ram.raw.pcapng (661 kB, 2959 packets)
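If you'd like to sanity-check the packet count of a Pcap-NG file without opening it in a GUI, the block structure is simple enough to walk with a few lines of Python. The following is a minimal sketch, not part of CapLoader; the function name `count_pcapng_packets` is made up for this example, and it assumes that every packet is stored in an Enhanced Packet Block (Simple Packet Blocks and obsolete Packet Blocks are not counted).

```python
import struct

SHB_TYPE = 0x0A0D0D0A          # Section Header Block
EPB_TYPE = 0x00000006          # Enhanced Packet Block
BYTE_ORDER_MAGIC = 0x1A2B3C4D  # tells us the endianness of a section


def count_pcapng_packets(data: bytes) -> int:
    """Count Enhanced Packet Blocks in the bytes of a Pcap-NG file."""
    endian = "<"
    offset = 0
    packets = 0
    # Every block is: type (4 bytes), total length (4 bytes), body,
    # total length again (4 bytes). Smallest possible block is 12 bytes.
    while offset + 12 <= len(data):
        block_type = struct.unpack_from(endian + "I", data, offset)[0]
        if block_type == SHB_TYPE:
            # The SHB type reads the same in both byte orders, so the
            # magic value right after the length field decides endianness.
            magic = struct.unpack_from("<I", data, offset + 8)[0]
            endian = "<" if magic == BYTE_ORDER_MAGIC else ">"
        block_len = struct.unpack_from(endian + "I", data, offset + 4)[0]
        if block_len < 12 or block_len % 4 or offset + block_len > len(data):
            break  # truncated or corrupt block, stop counting
        if block_type == EPB_TYPE:
            packets += 1
        offset += block_len
    return packets
```

Calling it as `count_pcapng_packets(open("SDN.ram.raw.pcapng", "rb").read())` should give a number comparable to the packet count stated above, provided the assumption about Enhanced Packet Blocks holds for that file.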

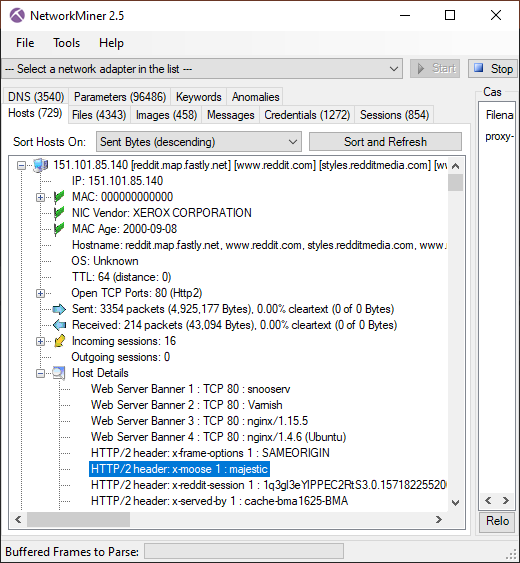

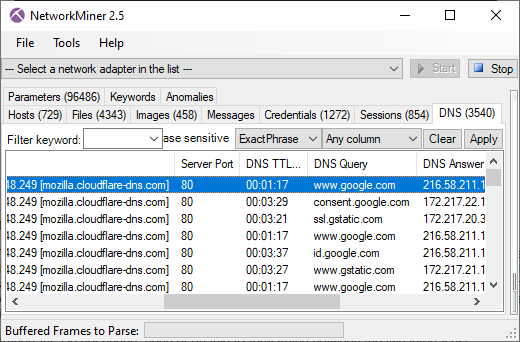

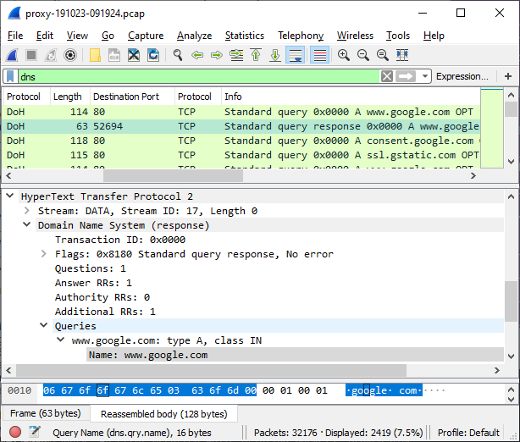

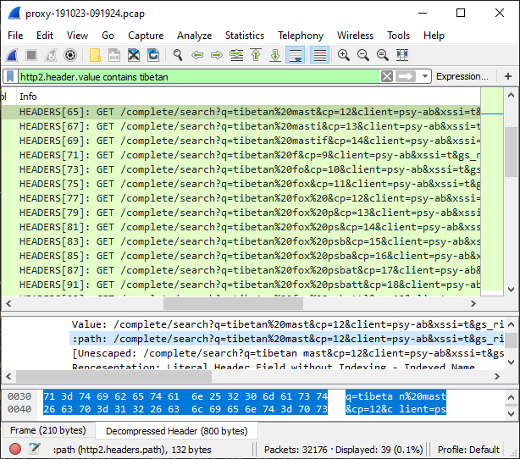

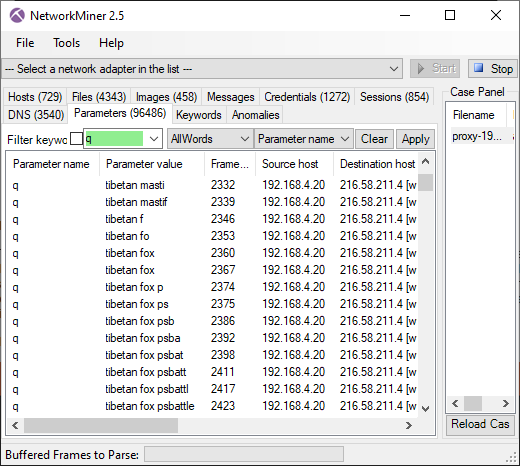

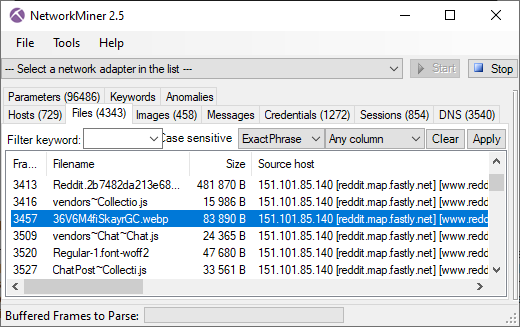

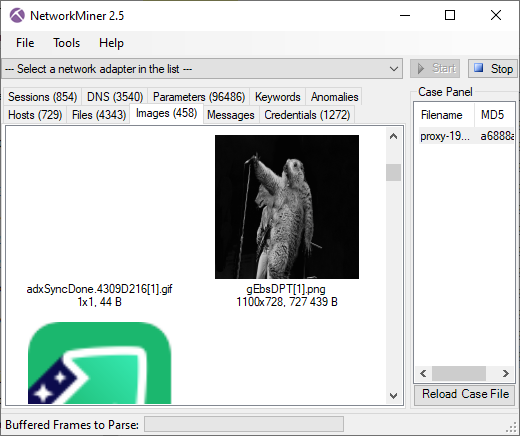

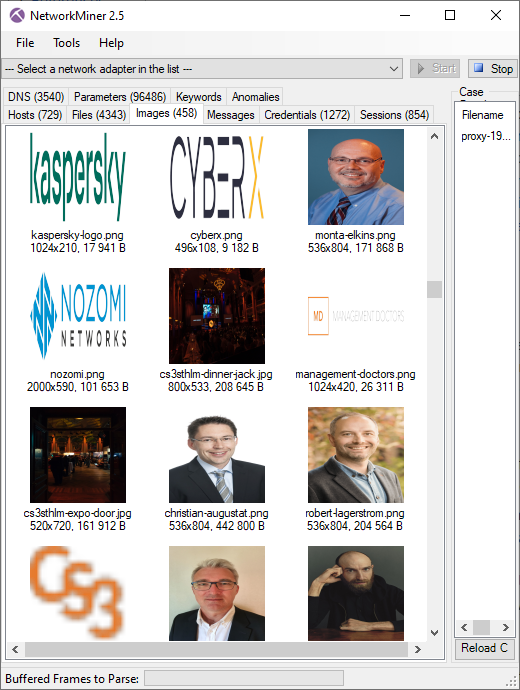

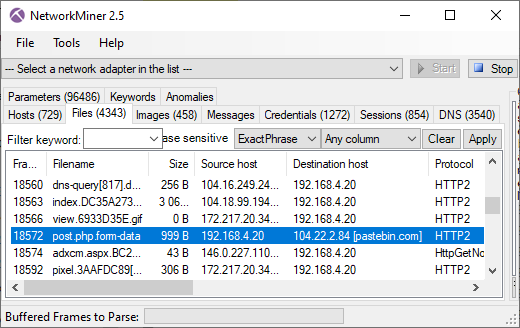

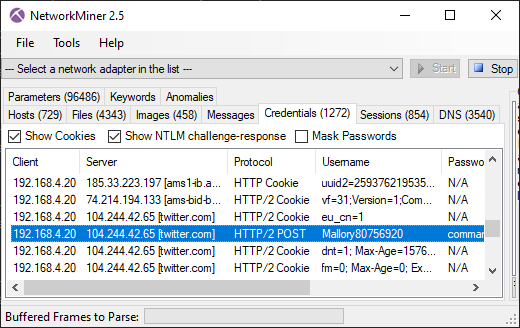

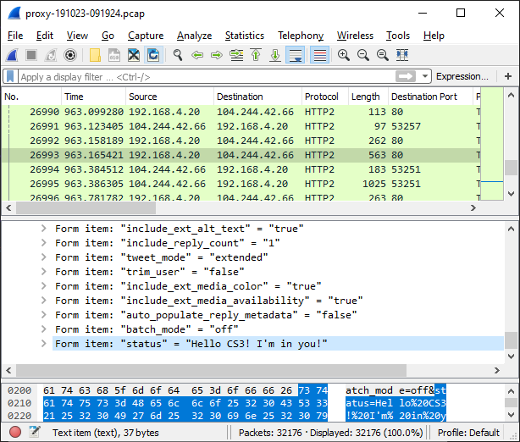

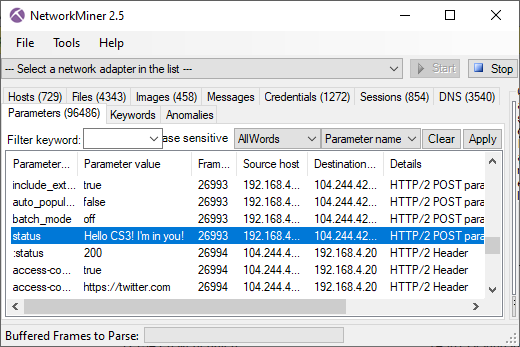

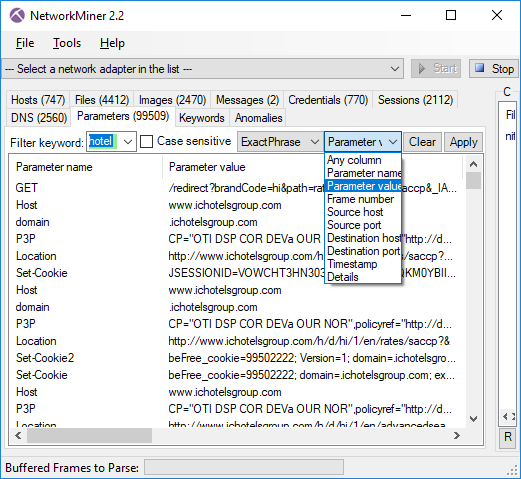

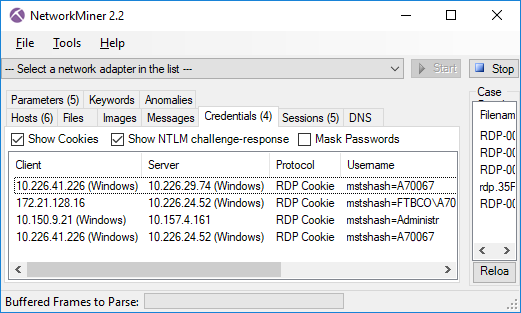

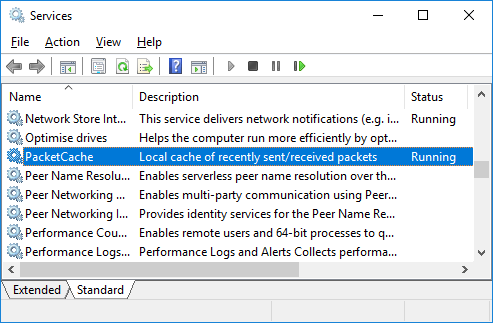

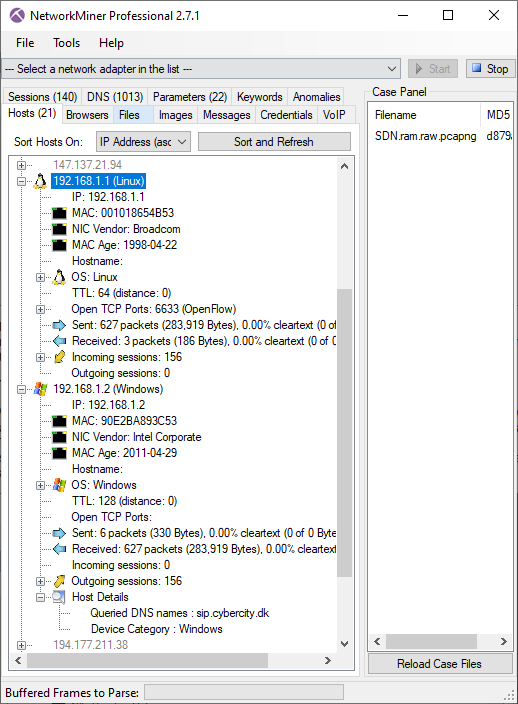

Here's what it looks like when the carved packets have been loaded into NetworkMiner Professional.

As you can see, a great deal of information can be extracted about the hosts on this network just by examining the dumped memory from the SDN switch.

What about Bulk Extractor?

Simson Garfinkel's bulk_extractor can also extract packets from memory dumps. It was actually a research paper by Simson that inspired me to implement a packet carver in the first place.

There are a few significant differences between bulk_extractor and CapLoader with regard to packet carving though. One difference is that bulk_extractor identifies network packets by looking for Ethernet frames containing IPv4 packets, while CapLoader looks for IPv4 or IPv6 packets containing TCP or UDP packets. The output from bulk_extractor is usually quite similar to that of CapLoader, and so is the parsing speed. CapLoader was just slightly faster in our tests and carved about 3% more packets than bulk_extractor. These additional packets were primarily IPv6 packets and packets that weren't encapsulated by an Ethernet frame.
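To give a feel for what "looking for IPv4 packets containing TCP or UDP" can mean in practice, here is a minimal Python sketch of a packet carver. It is neither CapLoader's nor bulk_extractor's actual algorithm, just one plausible approach under simple assumptions: it only handles IPv4 (no IPv6), it accepts a candidate when the version, header length, protocol and total length fields are plausible and the IPv4 header checksum validates, and it does not inspect the TCP or UDP header beyond a minimum size. The function names `ipv4_checksum_ok` and `carve_ipv4` are made up for this example.

```python
import struct


def ipv4_checksum_ok(header: bytes) -> bool:
    """True if the one's-complement sum over the header equals 0xFFFF."""
    if len(header) % 2:
        return False
    total = sum(struct.unpack("!%dH" % (len(header) // 2), header))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return total == 0xFFFF


def carve_ipv4(dump: bytes):
    """Yield (offset, packet) for plausible IPv4 packets with TCP or UDP."""
    offset = 0
    while offset + 20 <= len(dump):
        first = dump[offset]
        ihl = (first & 0x0F) * 4  # IPv4 header length in bytes
        if first >> 4 == 4 and ihl >= 20 and offset + ihl <= len(dump):
            total_len = struct.unpack_from("!H", dump, offset + 2)[0]
            proto = dump[offset + 9]
            min_l4 = 20 if proto == 6 else 8  # minimal TCP / UDP header
            if (proto in (6, 17)  # 6 = TCP, 17 = UDP
                    and total_len >= ihl + min_l4
                    and offset + total_len <= len(dump)
                    and ipv4_checksum_ok(dump[offset:offset + ihl])):
                yield offset, dump[offset:offset + total_len]
                offset += total_len  # continue after the carved packet
                continue
        offset += 1  # no packet here, slide one byte forward
```

The header checksum does most of the work in keeping false positives down, since a random byte sequence starting with 0x45 rarely passes it. Because this sketch starts matching at the IP header rather than at an Ethernet header, it would also find packets that aren't encapsulated by an Ethernet frame.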

Where can I download memory dumps?

Before writing this blog post, I asked the #DFIR community on Twitter for their favorite publicly available memory dumps, and I received lots of great answers. Thank you all for contributing! I have now compiled the following list of places where you can download memory dumps:

- Memory Images from the Stolen Szechuan Sauce case by James Smith

- Digital Corpora 2018 Lone Wolf Scenario by Thomas Moore

- Digital Corpora 2009 M57 Patents Scenario by Naval Postgraduate School

- DFRWS 2005 Challenge

- DFRWS 2008 Challenge

- DFRWS 2016 Challenge (SDN switch)

- CTF-styled memory forensic challenges by Abhiram Kumar

- Mini Memory CTF contest from 2020 by 13Cubed

- Web Server Case by Ali Hadi

- Locky Ransomware infection by Jiří Vinopal

- Memory images from the Art of Memory Forensics (book) by the Volatility Team

- Memory Forensic challenges on CyberDefenders

- GrrCON 2015 CTF by Wyatt Roersma

- GrrCON 2017 Memory Dumps by Wyatt Roersma

- GrrCON 2019 CTF by Wyatt Roersma

For a more detailed blog post on CapLoader's packet carving functionality, please see our Carving Network Packets from Memory Dump Files blog post from 2014.

Posted by Erik Hjelmvik on Tuesday, 31 August 2021 15:10:00 (UTC/GMT)

Tags: #Forensics #RAM #PCAP #Pcap-NG #PcapNG #DFIR #carve #carver #packets #dump #CapLoader #memory forensics #DFRWS