Don't Delete PCAP Files - Trim Them!

We are happy to release TrimPCAP today! TrimPCAP is a free, open source tool that reduces the size of capture files in an intelligent way.

The retention period of a packet capture solution is typically limited by either legal requirements or available disk space. In the latter case, the oldest capture files are simply removed when the storage starts to fill up. This means that if there is a long ongoing session, such as a download of a large ISO file, streamed video or a reverse shell backdoor, then the start of this session will likely be removed.

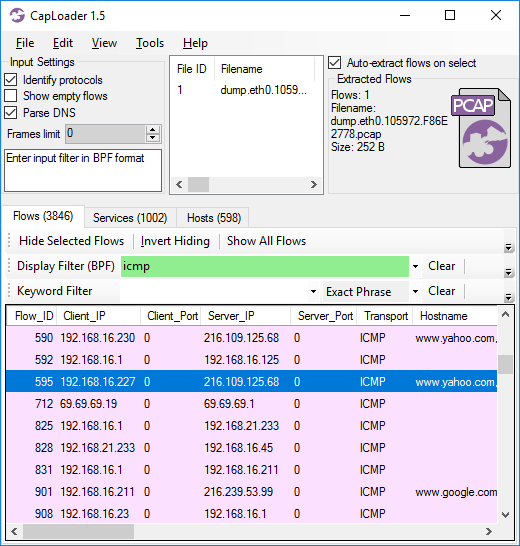

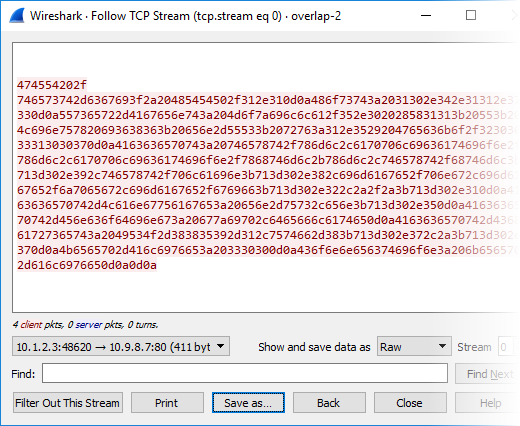

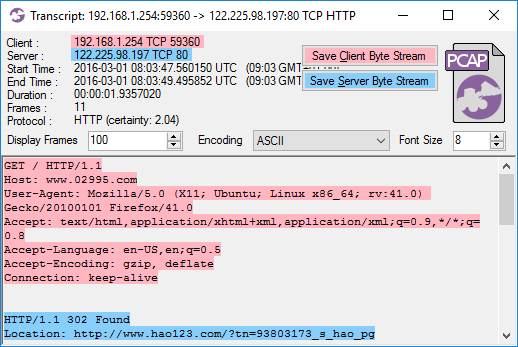

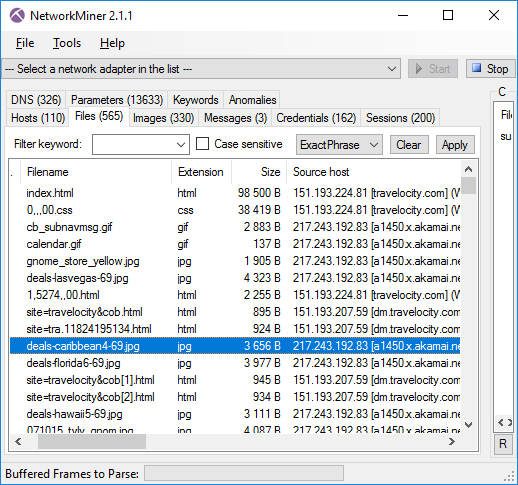

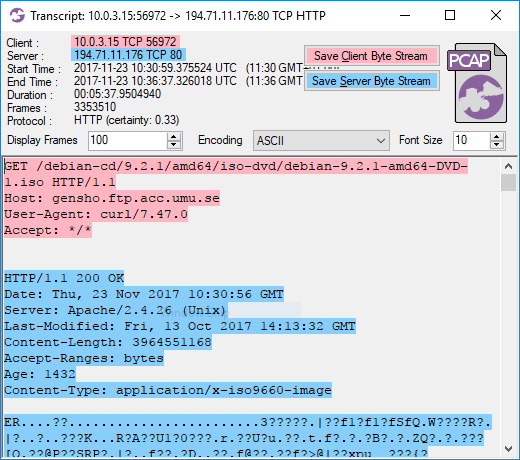

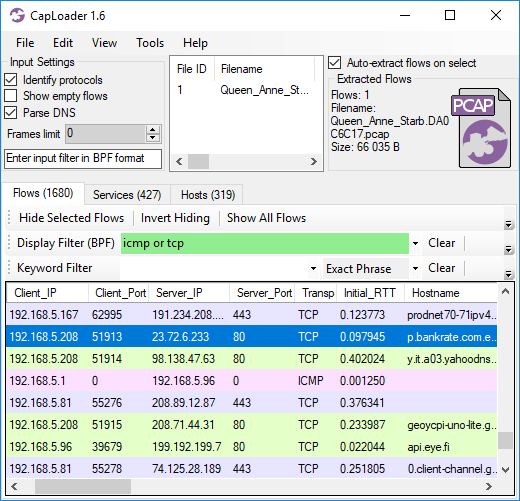

I know from experience that it’s painful to analyze network traffic where the start of a session is missing. The most important and interesting stuff generally happens at the beginning of each session, such as the HTTP GET request for an ISO file. As an analyst you don’t need to look at all the other packets in that ISO download (unless you believe the ISO contains malware); it’s enough to see that there is a GET request for the file and that the server responds with a “200 OK”.

Image: CapLoader transcript of an ISO download

If that download had been truncated, so that only the last few packets remained, then it would be really difficult to know what was being downloaded. The same is true for other protocols, including proprietary C2 protocols used by botnets and other types of malware.

✂ TrimPCAP

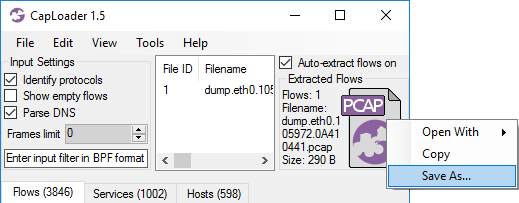

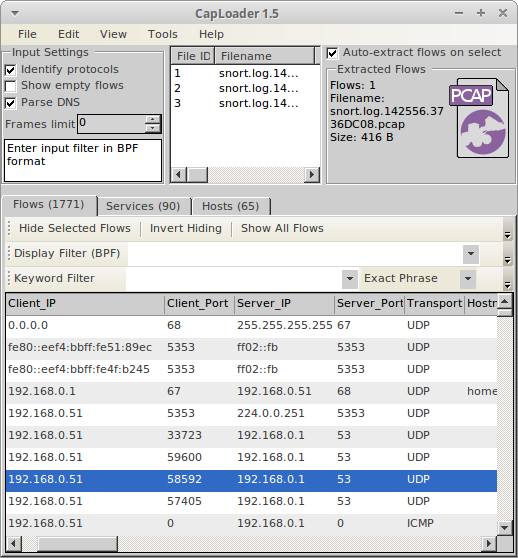

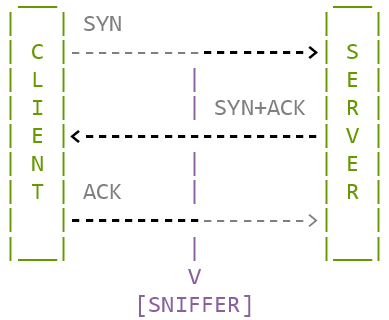

TrimPCAP is designed to overcome the issue with truncated sessions by removing data from the end of sessions rather than from the beginning. This also comes with a great bonus when it comes to saving on disk usage, since the majority of the bytes transferred across the Internet are made up of big sessions (a.k.a. “Elephant Flows”). Thus, by trimming a PCAP file so that it only contains the first 100kB of each TCP and UDP session, it’s possible to significantly reduce the storage required for that data.
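The idea of cutting each session off after a fixed number of bytes can be illustrated with a minimal sketch. This is not the code in trimpcap.py; it works on simplified packet records instead of a real capture file, and the names `flow_key` and `trim_flows`, the tuple layout, and the choice to share one byte budget between both directions of a session are all assumptions made for illustration.

```python
from collections import defaultdict


def flow_key(proto, src, sport, dst, dport):
    """Build a direction-independent key so both directions of a
    session are counted against the same byte budget (an assumption)."""
    a, b = sorted([(src, sport), (dst, dport)])
    return (proto, a, b)


def trim_flows(packets, max_bytes=102400):
    """Yield packets in order, dropping those past max_bytes in their flow.

    `packets` is an iterable of hypothetical records:
    (proto, src, sport, dst, dport, length), in capture order.
    """
    seen = defaultdict(int)  # bytes observed so far per flow
    for pkt in packets:
        proto, src, sport, dst, dport, length = pkt
        key = flow_key(proto, src, sport, dst, dport)
        seen[key] += length
        # Keep the packet only while the flow is within its budget;
        # once the budget is exceeded, all later packets are dropped.
        if seen[key] <= max_bytes:
            yield pkt


# Tiny example with a 120 byte cutoff instead of 100kB
example = [
    ("tcp", "10.0.0.1", 1234, "10.0.0.2", 80, 60),
    ("tcp", "10.0.0.2", 80, "10.0.0.1", 1234, 50),
    ("tcp", "10.0.0.1", 1234, "10.0.0.2", 80, 60),  # pushes flow past 120
    ("udp", "10.0.0.1", 5353, "10.0.0.9", 53, 100),
]
kept = list(trim_flows(example, max_bytes=120))
```

Because the start of every flow is always kept, short sessions pass through untouched and only the tails of large flows are removed.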

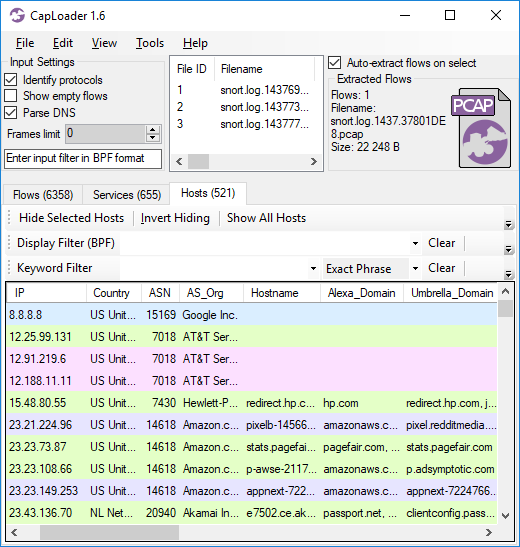

The following command reduces the PCAP dataset used in our Network Forensics Training from 2.25 GB to just 223 MB:

user@so$ python trimpcap.py 102400 /nsm/sensor_data/so-eth1/dailylogs/*/*

Trimming capture files to max 102400 bytes per flow.

Dataset reduced by 90.30% = 2181478771 bytes

user@so$

A maximum session size (or "flow cutoff") of 100kB enables trimpcap.py to reduce the required storage for that dataset to about 10% of its original size, which will significantly extend the maximum retention period.

Putting TrimPCAP Into Practice

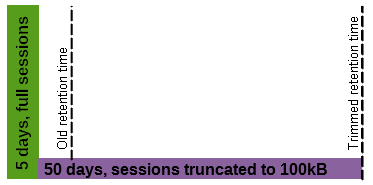

Let’s assume that your organization currently has a maximum full content PCAP retention period of 10 days and that trimming sessions to 100kB reduces the required storage to 10%. TrimPCAP will then enable you to store 5 days with full session data plus 50 additional days with trimmed sessions using the same disk space as before! The 5 full days occupy half of the disk, and the other half, which used to hold 5 days of full data, now holds ten times as many days of trimmed data.
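The arithmetic behind that estimate can be written down as a small helper. The function name `retention_days` and its parameters are made up for this sketch, and it assumes that traffic volume per day is constant and that trimming always reduces data to the same fraction of its original size.

```python
def retention_days(disk_days, full_days, trimmed_ratio):
    """Return (full_days, trimmed_days) that fit in the same disk space.

    disk_days     -- days of untrimmed traffic the disk holds today
    full_days     -- days that should stay untrimmed
    trimmed_ratio -- trimmed size relative to original size (0.10 = 10%)
    """
    # Disk space left after the untrimmed days, measured in "full days"
    remaining = disk_days - full_days
    # Each trimmed day only costs trimmed_ratio of a full day
    trimmed_days = remaining / trimmed_ratio
    return full_days, trimmed_days


# The example from the text: 10 days of disk, 5 kept in full, 10% ratio
full, trimmed = retention_days(10, 5, 0.10)
print("full days:", full, "trimmed days:", round(trimmed))
```

Plugging in your own measured ratio (for instance the percentage reported by trimpcap.py on a sample of your traffic) gives a rough estimate for your environment.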

A slightly more advanced scheme would be to have multiple trim limits, such as trimming to 1MB after 3 days, 100kB after 6 days and 10kB after 30 days. Such a setup would probably extend your total retention period from 10 days to over 100 days.
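Such a tiered scheme could be sketched as a lookup from file age to trim limit. The tier table below uses the limits from the paragraph above, with 1MB and 10kB expressed as multiples of 1024 bytes in line with the 102400 used for 100kB; the function names are hypothetical, and only the `python trimpcap.py <limit> <files>` invocation shown earlier in this post is assumed for the command.

```python
# (minimum age in days, trim limit in bytes), taken from the example tiers
TRIM_TIERS = [
    (3, 1024 * 1024),   # 1MB after 3 days
    (6, 100 * 1024),    # 100kB after 6 days
    (30, 10 * 1024),    # 10kB after 30 days
]


def trim_limit_for_age(age_days, tiers=TRIM_TIERS):
    """Return the flow cutoff in bytes for a capture file of the given
    age, or None if the file is still too young to be trimmed."""
    # Check the oldest tier first so the strictest matching limit wins
    for min_age, limit in sorted(tiers, reverse=True):
        if age_days >= min_age:
            return limit
    return None


def trim_command(limit, path):
    """Build the argument list for running trimpcap.py on one file."""
    return ["python", "trimpcap.py", str(limit), path]


for age in (1, 4, 10, 45):
    print(age, "days ->", trim_limit_for_age(age))
```

A daily cron job could walk the capture directory, compute each file's age, and run the resulting command with `subprocess.run` for files whose tier has changed.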

An even more advanced trimming scheme is implemented in our packet capture agent PacketCache. PacketCache constantly trims its PCAP dataset because it is designed to use only 1 percent of a PC’s RAM to store observed packets, in case they are needed later on for incident response. PacketCache uses a trim limit that declines individually for each observed TCP and UDP session, depending on when that session was last observed.

Downloading TrimPCAP

TrimPCAP is open source software and is released under the GNU General Public License version 2 (GPLv2).

You can download your own copy of TrimPCAP from the official TrimPCAP page:

https://www.netresec.com/?page=TrimPCAP

Happy trimming!

✂- - - - - - - - -

Posted by Erik Hjelmvik on Tuesday, 05 December 2017 12:40:00 (UTC/GMT)

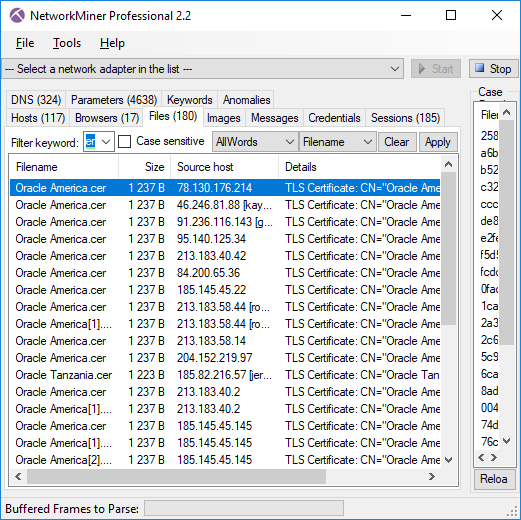

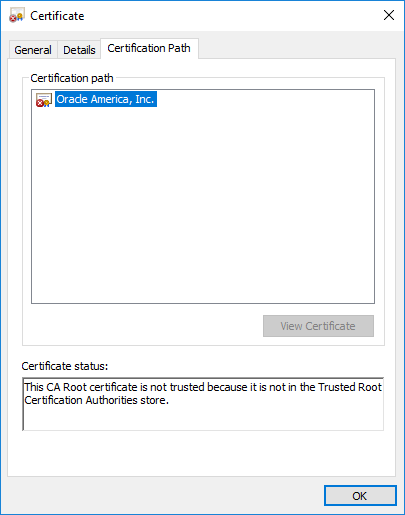

Image: Files extracted from ADT’s PCAP files that match “Oracle” and “cer”.

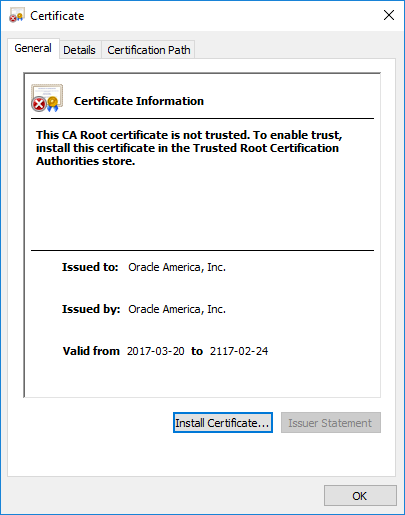

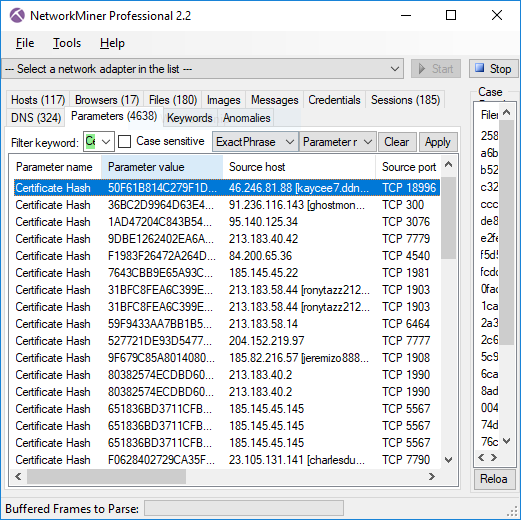

Image: Certificate Hash values found in Adwind RAT’s SSL traffic


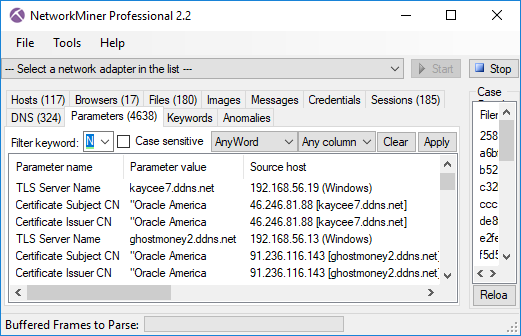

Image: TLS Server Name (aka SNI) and Subject CN values don’t match for AdwindRAT


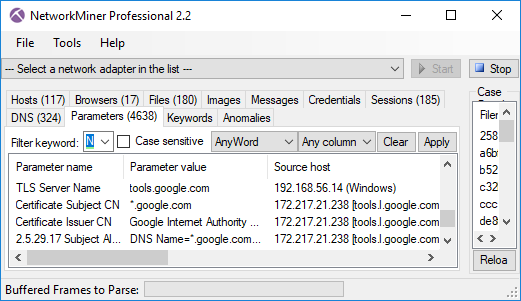

Image: TLS Server Name (SNI) with matching Subject CN from Google.
